Maze is now generally available, deployed in customer environments worldwide, and actively investigating vulnerabilities right now.

Your vulnerability management process feels like it’s broken. Shocking, we know. This isn’t anything 50 vendors before us haven’t already said.

It's not because you're doing it wrong. It's not because your team isn't talented. It's because the game is getting exponentially harder, and the approaches you’ve relied on can't keep up.

Every year, 40% more CVEs are found than the year before. AI is helping attackers exploit them faster than ever. No wonder it feels hard.

Do you have endless new findings that are all ‘critical’? Does it feel like you’d need 10 more people to triage them all properly? And that your dev team couldn’t patch every critical finding, even if they wanted to?

None of it is adding up.

So you're doing what everyone does: making your best guess about what matters, hoping developers don’t hate you, and trying not to think too hard about what you might be missing.

We watched too many brilliant security and development teams waste their time on vulnerabilities that never mattered in the first place. 99% of the vulnerabilities teams face today are irrelevant, whilst attackers are getting orders of magnitude better at exploiting the 1% that matter. We need to stop iterating on old approaches to vulnerability management that barely worked in the first place, and lean into drastically better solutions. That’s why we built Maze.

The Reality of Your Vulnerability Backlog

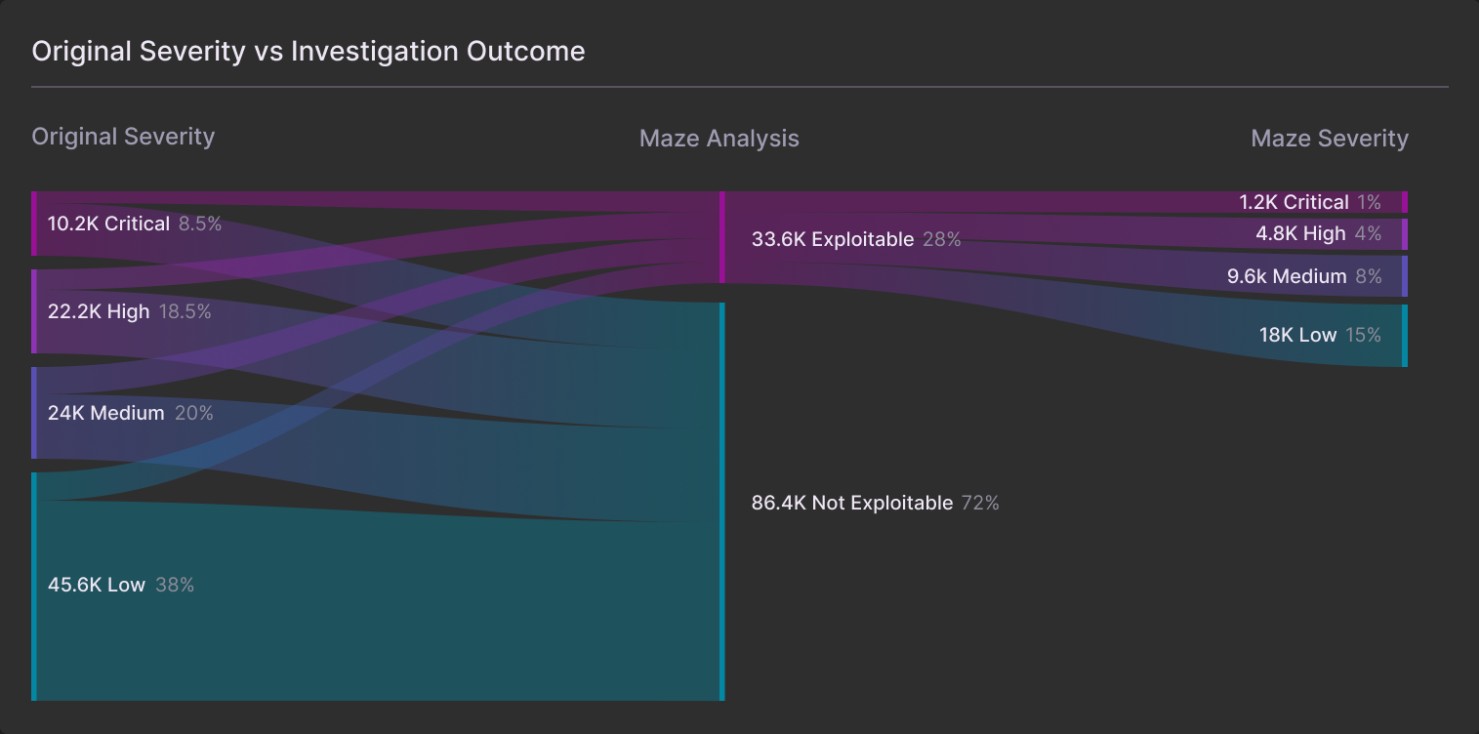

Let's start with a number that should frustrate all of us: based on our data, anywhere from 70% to 90% of the vulnerabilities in your environment aren't technically exploitable.

Not "probably won't be exploited." Not "low risk." They literally cannot be exploited, under any circumstances, in your environment with your specific configuration and controls.

But you're still spending time on them because your scanner doesn't know the difference between a vulnerability that looks like it might be there and a vulnerability that can actually hurt you.

The Industry's Exploitability Problem

In security, we're often sloppy with terminology, and sometimes vendors twist definitions in their favor. Case in point: exploitability.

The industry has been convinced that "exploitable" means "has this been seen exploited somewhere in the world?" That definition has serious problems.

First, we have terrible data on what's actually been exploited in the wild. Even in normal times, there's a significant lag between when vulnerabilities are disclosed and when exploitation data becomes available. If your security team waits for vulnerabilities to be exploited somewhere else before acting, you’re already behind. Sure, it should be a consideration when determining severity, but it shouldn’t be the only deciding factor.

Second, and more importantly, that's just not what exploitability should mean.

The real question is: can this vulnerability be exploited in my environment?

Not "is it being exploited somewhere else," but "can it be exploited here, in my infrastructure, with my configuration?"

When you look at a vulnerability in the context of a specific asset in a specific environment, nine times out of ten, there's zero chance it will ever be exploited. Not because of anything external, but because of how that asset is configured, how your infrastructure is set up, and a dozen other factors that make exploitation technically impossible.

That should be the true definition of exploitability. Not some feed about what's happening in the wild that we can't fully rely on (and that’s probably a few weeks too old).

Most tools ask: "Is there an exploit available in the wild?" That's helpful information, but it's not the question that matters most for your environment.

We ask: "Could an attacker actually exploit this vulnerability based on the context of your environment?"

That's a completely different question. And it requires an entirely different approach.

How Maze Works

We start by ingesting every finding from whatever scanners you’re using to find cloud vulnerabilities. They're great at finding vulnerabilities. We're great at investigating them and fixing the ones that matter.

AI Agents Change Everything

This is where AI agents change what's possible.

Until now, vulnerability management and cloud security tools have been built around rules and scores. Typically, they’ve combined risk scores that have no context about your business, like CVSS and EPSS, with business logic defined up front by humans. For example, a traditional tool might take the CVSS score for a CVE, then ‘contextualize’ the risk to your environment by layering on rules like "Is this asset public-facing?" or "Have you tagged this asset as a ‘crown jewel’?" But these are just filters: rules that capture only a tiny fraction of the context needed to fully assess the risk. Crucially, rules can almost never capture enough context to identify false positives, the findings that cannot be exploited at all. It’s like shuffling a deck of cards, knowing that 90% of the cards should never have been in the pack in the first place.
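To make the contrast concrete, here is a minimal, hypothetical sketch of the kind of rule-based prioritization described above. The `Finding` fields, the score bumps, and the thresholds are illustrative assumptions, not any particular vendor's logic. Note that nothing in it can ever conclude a finding is unexploitable; it can only reorder the deck.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    # Hypothetical fields a scanner-driven tool might hold per finding.
    cve_id: str
    cvss: float          # CVSS base score, 0.0 to 10.0
    epss: float          # EPSS probability, 0.0 to 1.0
    internet_facing: bool
    crown_jewel: bool


def rule_based_priority(f: Finding) -> str:
    """Assign a priority using fixed, human-defined rules (illustrative only)."""
    score = f.cvss
    # Context "rules" only nudge the score upward; none can remove a finding.
    if f.internet_facing:
        score += 1.0
    if f.crown_jewel:
        score += 1.0
    if f.epss >= 0.1:
        score += 0.5
    if score >= 9.0:
        return "critical"
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    return "low"


findings = [
    Finding("CVE-A", 7.8, 0.02, True, False),
    Finding("CVE-B", 5.3, 0.01, False, False),
]
for f in findings:
    print(f.cve_id, rule_based_priority(f))
```

Every finding comes out with one of four priorities. There is no branch that says "cannot be exploited here," because none of the inputs carry enough context to support that conclusion.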

For the first time in security, large language models (LLMs) give us the ability to build systems that can analyze data and reason at the level of a human analyst. AI agents let us chain LLMs together and apply that intelligence to complex security investigations and tasks.

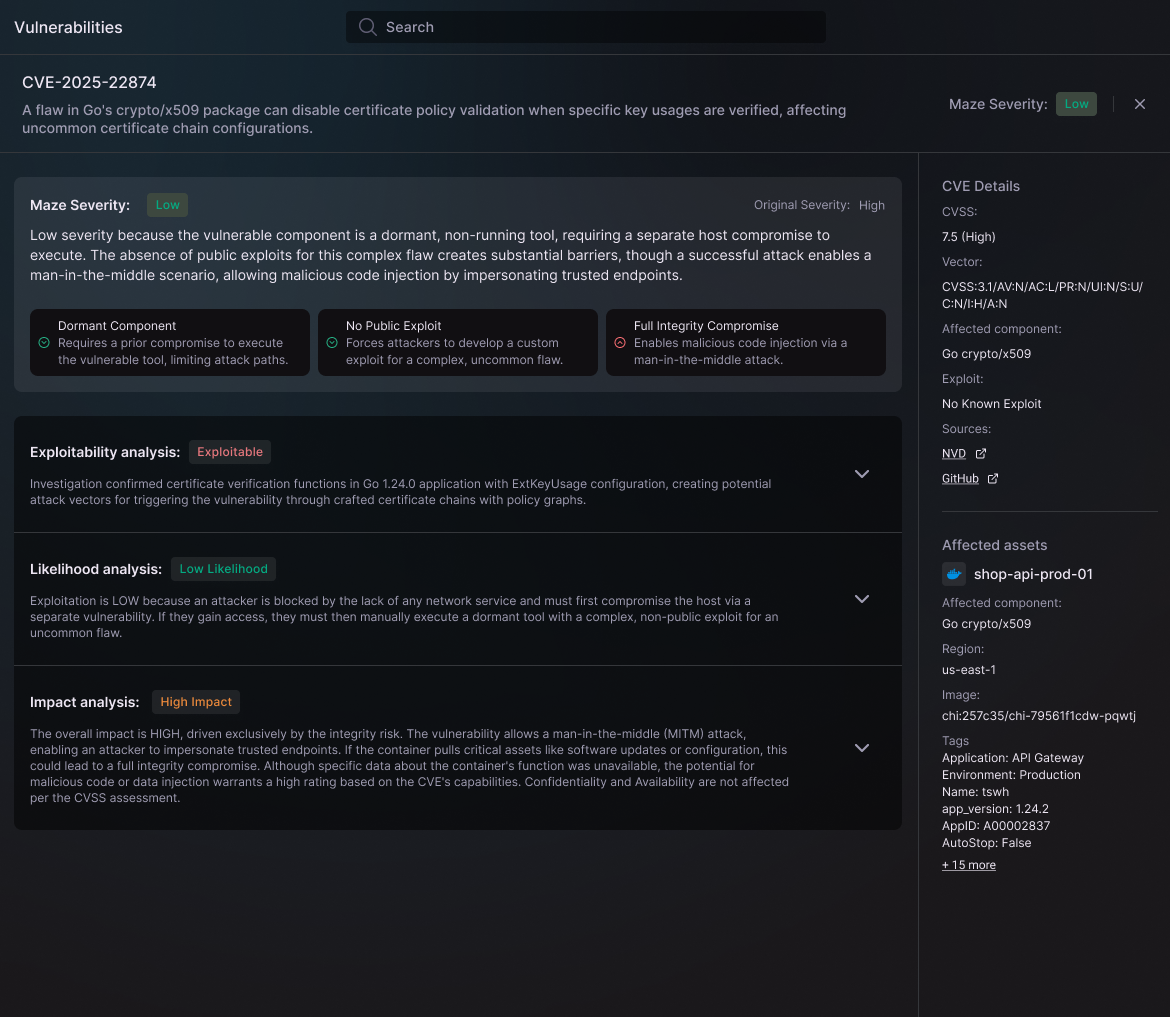

Instead of fixed logic that asks predefined questions, our AI agents go deep on each vulnerability, examining prerequisites, mitigating factors, the surrounding context, and multiple layers of configuration. It's not about having more datapoints to filter on. It's about actually interpreting what those signals mean together, dynamically, the way a security engineer would during an investigation.

What About Runtime?

You might be thinking: don’t you need to understand the live state of the infrastructure to do this properly? If so, will I need to install a sensor or eBPF agent?

Yes, we need live context, but no, Maze doesn't need a sensor. Runtime context is crucial for understanding vulnerabilities properly, but with Maze, there is nothing to install.

Our AI agents gather runtime context, including deep insights into containers, without requiring a sensor or eBPF agent. The magic here is the flexibility and reasoning abilities of AI agents, which allow us to dynamically gather runtime context from cloud service providers without the need for a sensor.

You get all the context, with none of the overhead.

Agents Do the Work You Don't Have Time For

How would an expert security engineer investigate a vulnerability if they had infinite time to do so? That’s the question we asked ourselves repeatedly as we built Maze, and it is the simplest way to understand how the system works.

We built a multi-agent system in which each specialized agent has access to specific tools, carefully selected to keep them focused and effective. Think of it like building a house: you don't give all the tools to one person and hope for the best. You have an electrician, a plumber, and a carpenter, each with their own expertise.

Our agents work the same way.
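Here is a minimal sketch of the tool-scoping idea, assuming each agent is simply an object holding an allow-list of callable tools. The agent names and the `lookup_cve` and `describe_instance` functions are hypothetical stand-ins; in an LLM-driven system the agent would decide which of its tools to call, but the restriction works the same way.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical tools; a real system would call vulnerability databases or cloud APIs.
def lookup_cve(cve_id: str) -> str:
    return f"advisory text for {cve_id}"


def describe_instance(instance_id: str) -> str:
    return f"configuration for {instance_id}"


@dataclass
class SpecialistAgent:
    """An agent that may only call the tools it was explicitly given."""
    name: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def use(self, tool_name: str, arg: str) -> str:
        # Refuse any tool outside this agent's allow-list.
        if tool_name not in self.tools:
            raise PermissionError(f"{self.name} has no access to {tool_name!r}")
        return self.tools[tool_name](arg)


researcher = SpecialistAgent("researcher", {"lookup_cve": lookup_cve})
config_inspector = SpecialistAgent("config_inspector", {"describe_instance": describe_instance})

print(researcher.use("lookup_cve", "CVE-X"))
try:
    researcher.use("describe_instance", "i-123")
except PermissionError as err:
    print(err)
```

Keeping each agent's toolset small narrows what it can do and what it has to choose between, which is the same reason you don't hand the plumber the electrician's tools.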

Here's what that looks like in practice:

Let's say your scanner finds a Linux kernel vulnerability. Some tools might flag it as "Critical" because it’s a high-severity CVE, or because it triggers a prioritization rule, such as being on an internet-facing asset.

Our agents go deeper:

- They research the vulnerability using trusted sources (NVD, VulnCheck, and more) and determine that exploiting this specific vulnerability requires reaching a particular function

- They check your configuration and see you're running on Intel architecture

- They pull documentation showing that the vulnerable subsystem isn't used on Intel architecture

- They confirm with actual evidence from your infrastructure that the required function literally doesn't exist in your environment

Result: Not exploitable. Not even if the attacker already had a foothold in your environment.

That investigation happened in minutes. Without any human intervention. And with complete transparency into every step of the reasoning.
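The transparency described above amounts to keeping an evidence trail for every vulnerability-asset pair. Below is a hypothetical sketch of such a record, replaying the kernel example. The class names, fields, placeholder CVE ID, and the rule that a finding is only marked unexploitable when environment evidence disproves a prerequisite are our assumptions for illustration, not Maze's internal schema; in practice the agent's reasoning, not a hard-coded flag, would decide that.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    action: str      # what the agent did
    evidence: str    # what it observed
    conclusion: str  # what it inferred from that observation


@dataclass
class Investigation:
    cve_id: str
    asset: str
    steps: List[Step] = field(default_factory=list)
    # Set True only when evidence from the environment disproves a prerequisite.
    prerequisite_disproven: bool = False

    def record(self, action: str, evidence: str, conclusion: str) -> None:
        self.steps.append(Step(action, evidence, conclusion))

    def verdict(self) -> str:
        # Default to the cautious answer unless evidence rules exploitation out.
        return "not exploitable" if self.prerequisite_disproven else "possibly exploitable"

    def report(self) -> str:
        lines = [f"{self.cve_id} on {self.asset}: {self.verdict()}"]
        for i, s in enumerate(self.steps, 1):
            lines.append(f"{i}. {s.action} -> {s.evidence} => {s.conclusion}")
        return "\n".join(lines)


# Hypothetical replay of the kernel example.
inv = Investigation("CVE-XXXX-YYYY", "node-1")
inv.record("research advisory", "exploit needs a specific kernel function", "prerequisite identified")
inv.record("check configuration", "host runs on Intel architecture", "architecture known")
inv.record("read documentation", "vulnerable subsystem unused on Intel", "prerequisite unlikely")
inv.record("inspect host", "required function not present", "prerequisite disproven")
inv.prerequisite_disproven = True
print(inv.report())
```

Defaulting to "possibly exploitable" keeps the burden of proof on the evidence: a finding drops out only when the trail shows why.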

We run investigations concurrently across millions of vulnerability-asset pairs, giving you a near-perfect understanding of your vulnerability backlog, as if you had thousands of analysts working on it.

Severity, as a Human Would See It

There are, of course, always some vulnerabilities that we cannot prove are unexploitable. But being theoretically exploitable usually doesn’t make them worthy of attention.

For the majority of exploitable vulnerabilities, factors in your environment make them either nearly impossible to exploit or almost harmless if exploited.

Again, the guiding question is: how would an expert security engineer assess severity if they had all the time in the world to investigate? The key is having AI agents reason about how all the different pieces of context fit together, rather than relying on rules to approximate the risk. For example, an asset’s exposure to the internet might make a vulnerability more critical, or it could be completely irrelevant, depending on what an attacker needs to do to exploit the vulnerability.

We ask the agents two simple questions: how likely is this vulnerability to be exploited based on the exact context of my environment, and what would the business impact be if it were?

What This Means For Your Team

One of our customers described it like this: "It's like hiring 50 security analysts whose only job is to look at vulnerabilities, multiple times a day."

Instead of thousands or millions of findings to manually triage, you're presented with only the few that actually matter. The ones where an attacker could do real damage in your environment. The ones where you need to act.

And when you do need to act, we give you everything you need:

- Exactly what commands we ran

- How we analyzed the vulnerability

- Why it is or isn't exploitable

- Where it lives in your infrastructure

- How it can be fixed

- Who’s responsible for fixing it

- Complete transparency into the reasoning

We also meet your developers where they work, pushing all of this directly to Jira, ServiceNow, or any other tool you use.

The vulnerabilities flow to us, we figure out what matters, and the important ones flow to your team with all the context they need.

What About My Auditors?

In regulated environments, vulnerabilities usually have strict patching timelines: for example, critical vulnerabilities within 7 days and high-severity ones within 30. Your auditor doesn't care that most of those "critical" vulnerabilities can't actually be exploited in your environment.

Until now, you had two options:

- Waste enormous time patching things that don't matter

- Spend enormous time manually proving to auditors why you didn't patch them

Maze gives you a third option: we've already done the analysis and gathered the evidence, so why don’t we fill out your exception process for you? We offer one-click exports in the format you need (or push them to your tool) to document why you haven’t patched specific vulnerabilities, saving everyone a huge amount of time.
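As an illustration of what such an export might contain, here is a hypothetical sketch that turns exception records into CSV using Python's standard `csv` module. The column names and the sample record are assumptions; real exception templates vary by auditor and framework, and this is not Maze's export format.

```python
import csv
import io
from typing import Dict, List

# Hypothetical column set; adjust to whatever your auditor's template requires.
FIELDS = ["cve_id", "asset", "scanner_severity", "verdict", "justification"]


def export_exceptions(records: List[Dict[str, str]]) -> str:
    """Render risk-exception records as CSV text for an audit package."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for rec in records:
        writer.writerow(rec)
    return buf.getvalue()


records = [
    {
        "cve_id": "CVE-XXXX-YYYY",
        "asset": "node-1",
        "scanner_severity": "critical",
        "verdict": "not exploitable",
        "justification": "required kernel function absent on this host",
    }
]
print(export_exceptions(records))
```

Because each row carries the verdict and its justification together, the same evidence gathered during the investigation doubles as the audit record.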

"But Shouldn't We Just Patch Everything?"

In a perfect world with infinite resources and zero risk of breaking production with patches, sure, you'd patch every vulnerability immediately.

But we've never seen an organization with zero vulnerabilities. We've never seen a team with enough people to manually triage everything. AI isn’t ready to auto-fix everything yet.

What we have seen is talented security teams burning out trying to do the impossible. And we've seen organizations getting breached because the few real vulnerabilities got lost in the noise.

Lowering your risk isn't about achieving zero vulnerabilities. It's about making sure the vulnerabilities that could actually hurt you are the ones you fix.

We're Live

Maze is here. Everything we’ve talked about here is actively running in customers’ production environments right now. We're analyzing real infrastructure, investigating real vulnerabilities, and helping security teams focus on what matters. And yes, we’re using AI agents that actually work.

If you're tired of drowning in vulnerabilities, get in touch.

Your team deserves better than a 100,000+ item backlog and crossed fingers.

Ready to see what vulnerability management looks like when AI does the investigation? Book a demo to see Maze in action.